Sustainable computer chips set to supercharge AI

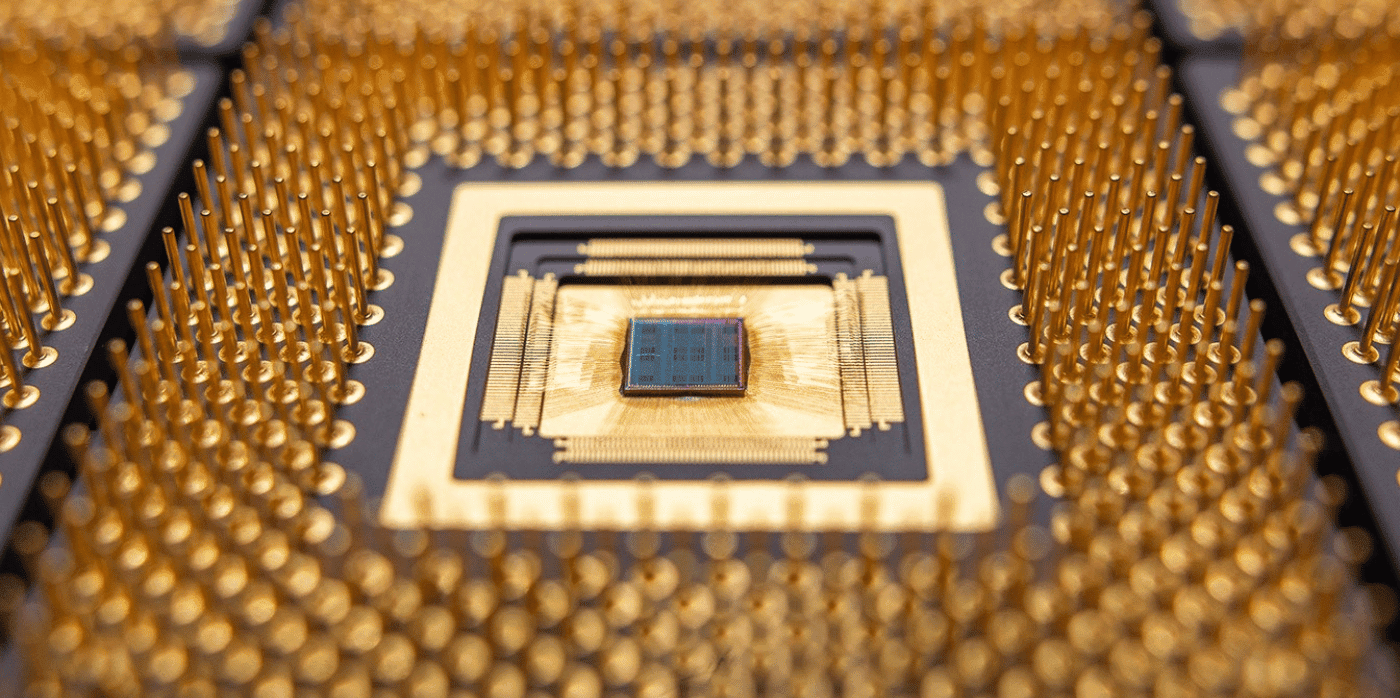

Spotted: It is clear that AI represents the future of computing, but today’s most popular AI chips, graphics processing units (GPUs), are expensive and require huge amounts of energy. That makes them incompatible both with broader use outside data centres and with a low-carbon future. Now, EnCharge AI, which was spun out of the lab of Dr Naveen Verma at Princeton University, is building on new research outside of the GPU model to bring AI capabilities to a broader range of users.

To create chips that can handle modern AI in smaller or lower-energy environments, the researchers turned to analogue computing. The team designed capacitors that work with the analogue signal and switch on and off with extreme precision. By performing this computation directly in memory cells, an approach known as in-memory computing, they created a chip that can run powerful AI systems using much less energy.

EnCharge’s PR Account Manager, Yhea Abdulla, explained to Springwise: “It’s all geometry-based, aligning wires that come in a capacitor (no extra parts, costs, or special processing). They combined this with their research in in-memory computing (IMC) to enhance computing efficiency and data movement issues, bringing in the value of analogue without the historical limitations.”

EnCharge has recently been awarded an $18.6 million (around €17.2 million) grant by the US Defense Advanced Research Projects Agency (DARPA). The funding will be used to further develop the chip technology as part of DARPA’s Optimum Processing Technology Inside Memory Arrays (OPTIMA) programme, which aims to unlock new possibilities for commercial and defence-relevant AI workloads not achievable with current technology.

From climate forecasting to food waste and cancer detection, AI is already being incorporated into many aspects of daily life. This makes it vital to reduce the energy needed to run AI applications.

Written By: Lisa Magloff